Tzu-Yuan (Justin) Lin

Hello! I’m a Postdoctoral Associate at the MIT Biomimetic Robotics Lab, working with Prof. Sangbae Kim. Prior to that, I earned my Ph.D. in Robotics at the University of Michigan, under the guidance of Prof. Maani Ghaffari.

My research interests lie at the intersection of machine learning and robot perception. I’m particularly interested in exploring the symmetry structure of a system to develop efficient and generalizable robot algorithms. In the past, I have worked on robot visual odometry, state estimation, representation learning, and geometric deep learning. More recently, I have been working on generalizable robot perception for manipulation tasks.

Prior to my Ph.D. journey, I obtained my M.S. in Robotics at the University of Michigan. Before that, I received my B.S. in Mechanical Engineering from National Taiwan University.

If you find any of my work interesting, feel free to reach out to me at tzuyuan at umich dot edu. I’m happy to chat!

News

- [03/2025] I moved to Boston and joined the MIT Biomimetic Robotics Lab as a postdoctoral associate! Super excited for the interesting projects I will be doing here. :D

- [12/2024] I successfully defended my Ph.D. thesis! You can watch the recording here.

- [10/2024] Chien Erh (Cynthia) Lin and I gave a keynote talk at the IROS equivariant robotics workshop.

- [06/2024] Chien Erh (Cynthia) Lin and I gave a joint Robotics Seminar at the University of Notre Dame, “It’s the Same Everywhere: Leveraging Symmetry for Robot Perception and Localization.”

- [05/2024] Our paper Lie Neurons was accepted to ICML 2024!

- [02/2024] I gave a guest lecture in ROB 530: Mobile Robotics at the University of Michigan.

- [11/2023] I passed my thesis proposal!

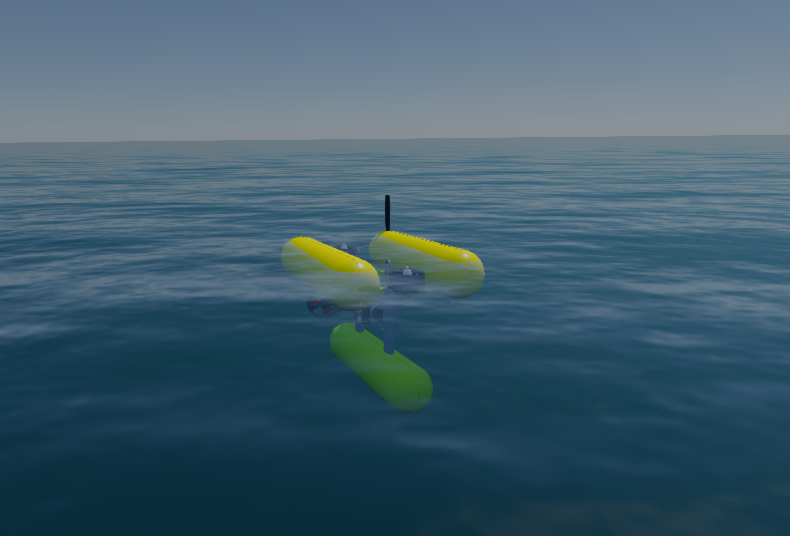

Robots I Have Worked With

I’ve been lucky enough to work with several different robots in the past!